Just got a GTX 680. Will my system bottleneck it?

This topic is locked from further discussion.

Why do you buy a GPU if you don't know whether it will be bottlenecked or not?

Silicel1

That's not the question I asked. Obviously you don't know the answer. Why even post?

[QUOTE="Silicel1"]Why do you buy a GPU if you don't know whether it will be bottlenecked or not?

ProjectPat187

That's not the question I asked. Obviously you don't know the answer. Why even post?

Sorry, but buying something that you know nothing about is stupid.

[QUOTE="ProjectPat187"][QUOTE="Silicel1"]Why do you buy a GPU if you don't know whether it will be bottlenecked or not?

Silicel1

That's not the question I asked. Obviously you don't know the answer. Why even post?

Sorry, but buying something that you know nothing about is stupid.

So what? Again, that's not the question. It's not like it won't work in my PC. How about you stop posting, because it's obvious you are a troll.

Thanks so much for the responses, guys, except that one troll who came to post useless info.

ProjectPat187

All he did was ask a goddamn question and then state his opinion. Your responses, while not making you a troll, make you look like an ass, btw.

[QUOTE="ProjectPat187"]Thanks so much for the responses, guys, except that one troll who came to post useless info.

Super-Trooper

All he did was ask a goddamn question and then state his opinion. Your responses, while not making you a troll, make you look like an ass, btw.

And all I did was ask a goddamn question, and not for his opinion or yours. Troll.

Ah cool, thanks a bunch, man, just what I was looking for.

And games don't even use virtual threads (Hyper-Threading) in-game... :lol:

So, losing 30% of performance from not using PCI-E 3.0 and not having a hyperthreaded CPU :lol:

MonsieurX

[QUOTE="ProjectPat187"]Thanks so much for the responses, guys, except that one troll who came to post useless info.

Super-Trooper

All he did was ask a goddamn question and then state his opinion. Your responses, while not making you a troll, make you look like an ass, btw.

He made a fair point. Who buys a $500 piece of equipment without knowing if it will work properly?

A card not working properly and how much performance you get out of it are two totally different things.

ProjectPat187

You're right about that, but not about the other thing. If you can stand a little unsolicited advice from a stranger: don't feed the trolls, and don't call them trolls. That's name-calling, and sometimes what looks like a troll can be brought around with patience and courtesy.

[QUOTE="ClashoftheConso"]Yes. For full performance, you'll need either a Z77 or X79 mobo, [True] PCIe 3.0, and a Hyper-Threading processor [like Intel's quad-core or six-core i7]. If you're not using a Z77 or X79 board with 3.0, you lose between 15-20% of a Kepler GPU's performance. And just because a board has PCIe 3.0 doesn't mean it's faster: I have an ASUS MAXIMUS Extreme KOG mobo, and it HAS PCIe 3.0, BUT it's a Z68. So it isn't really any faster than if my board were PCIe 2.0. It has to be a newer LGA 1155 Z77 board (Intel 7 Series), or an LGA 2011 X79. And if your CPU isn't 'Hyper-Threading', like my Intel quad-core i5 2500K (2nd gen), then you lose another 10-15% performance. It all boils down to the fact that newer GPUs (like the GTX 680) use a different architecture that really requires a lot more bus; otherwise you're bottlenecked. The 'newer' cards are far, far, far more powerful in regards to power consumption and latency vs raw power and transfer rate and quantity.

Think of your old GPU as a tanker truck and your old PCIe 2.0 and P67 or Z68 mobo as a one-way road at 25 mph. Now, think of your NEW GPU as three tanker trucks @ 25 mph down that same road... can't happen, the road just wouldn't fit them all. So your new mobo is the three-lane road you need... better now. Finally, your CPU is the speed limit: if you have the right one, imagine those three tanker trucks on that same three-lane road, but now they're going 40 mph.

Now on the bright side: I have a PNY GTX 680 myself. And I can tell you that for all the alleged bottlenecking, on a board like ours, my GPU runs blazing fast. I can EASILY run ANY game on the market at Ultra settings, modded and with FXAA cranked. I play Battlefield 3 @ 90 FPS on Ultra, The Witcher 2 on Ultra with ALL settings on (including 'uber AA') at 72 FPS, Batman: Arkham City on Ultra and PhysX @ 90 FPS, and Metro 2033 on Ultra & PhysX @ 62 FPS, on a 1080p 240Hz LED!
So don't even worry about the bottlenecking. Besides, in about 2 years i7 six-cores and LGA 2011 mobos will be affordable, and all you have to do is simply upgrade them for that 35-45% performance boost... or nab another GTX 680 when they're $300 by then.

ProjectPat187

Thanks so much for the well-informed post, this definitely helped. My system specs are: Intel i7 920, 6GB G.Skill memory (1333), Asus P6T Deluxe mobo, GTX 285, running at 1920x1080. So just to clarify, I should return the GTX 680 and just get the GTX 580 for now since it is PCI Express 2.0? I'll just get the GTX 680 again when I build a new system at the end of the year.

That was not a well-informed post. It was a bunch of BS wrapped in pretty paper to make it sound like truth. Almost none of what he said translates to the real world in any way.

And as I mentioned, without the perfect combo of the right mobo and CPU, PCIe 3.0 has little to no gain over 2.0 with a 3.0 card. Hardware Canucks did that test with an older mobo and CPU, so it isn't fair to compare it to stuff just coming out. The GTX 680 has arguably been released before manufacturers have had a chance to release the parts to complement it. Give it a year and those PCIe 2.0 vs 3.0 benchmarks will be different. People said the same thing about 6-core vs 4-core CPUs when the AMD 6-core came out (about it being slower than Intel's i5/i7 quads?). Yet here we are a year later, Intel's 6-core is out, and people are singing a different tune. Give it time to mature.

ClashoftheConso

Considering that there is little to no appreciable performance difference between PCI-E x8 and x16 in many situations, I find that statement highly unlikely.
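For a rough sense of the numbers behind the x8/x16 point, here is a minimal Python sketch of theoretical PCIe link bandwidth. It assumes the published per-lane signaling rates (2.5, 5 and 8 GT/s for PCIe 1.0/2.0/3.0) and line-code overheads (8b/10b for 1.0/2.0, 128b/130b for 3.0); the helper name `pcie_bandwidth_gb_s` is made up for illustration, and the results are one-direction peak figures that ignore packet overhead, so they say nothing direct about in-game FPS.

```python
# Per-lane signaling rate (GT/s) and line-code efficiency per PCIe generation.
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),     # 8b/10b encoding
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}


def pcie_bandwidth_gb_s(gen: str, lanes: int) -> float:
    """Hypothetical helper: theoretical one-direction peak in GB/s (10**9 bytes/s)."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    # Each transfer moves one bit per lane; divide by 8 to convert bits to bytes.
    return gt_per_s * efficiency * lanes / 8


if __name__ == "__main__":
    for gen, lanes in (("2.0", 8), ("2.0", 16), ("3.0", 8), ("3.0", 16)):
        print(f"PCIe {gen} x{lanes}: {pcie_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

On these assumptions PCIe 3.0 x8 lands close to PCIe 2.0 x16, which is the logic of the x8-vs-x16 argument: if halving the bandwidth barely changes results, doubling it is unlikely to add 15-20%.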

PCI-E 2.0 won't bottleneck your new GPU, but your CPU at stock speeds will bottleneck it in most games. Still, it's not worth upgrading your computer; it's already great as it is.
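The CPU-bottleneck point can be illustrated with a deliberately simplified model: if the CPU prepares the next frame while the GPU renders the current one, the frame rate is set by whichever stage takes longer per frame. This sketch assumes that pipelined model, assumes CPU frame time scales perfectly with clock speed (a best case), and uses hypothetical frame times; the function names are illustrative, and none of the numbers are measurements of the i7 920 or any real game.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when CPU and GPU work overlap (pipelined model)."""
    # The slower stage sets the pace; the faster one waits on it.
    return 1000.0 / max(cpu_ms, gpu_ms)


def limiting_stage(cpu_ms: float, gpu_ms: float) -> str:
    """Name the stage that bounds frame rate in this simplified model."""
    if cpu_ms > gpu_ms:
        return "CPU"
    if gpu_ms > cpu_ms:
        return "GPU"
    return "balanced"


def cpu_ms_after_overclock(cpu_ms: float, stock_ghz: float, new_ghz: float) -> float:
    """Assumes CPU frame time scales inversely with clock speed (optimistic)."""
    return cpu_ms * stock_ghz / new_ghz


if __name__ == "__main__":
    # Hypothetical per-frame costs, chosen only to illustrate the idea.
    cpu_ms, gpu_ms = 14.0, 9.0
    print(f"stock: {fps(cpu_ms, gpu_ms):.1f} FPS, limited by {limiting_stage(cpu_ms, gpu_ms)}")

    # 2.66 GHz is the i7 920's stock clock; 3.8 GHz is a hypothetical overclock.
    oc_ms = cpu_ms_after_overclock(cpu_ms, stock_ghz=2.66, new_ghz=3.8)
    print(f"overclocked: {fps(oc_ms, gpu_ms):.1f} FPS, limited by {limiting_stage(oc_ms, gpu_ms)}")
```

In this made-up case, a faster GPU would not raise the frame rate at all until the CPU's per-frame time drops below the GPU's, which is what "the CPU will bottleneck it" means in practice.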

Yes. For full performance, you'll need either a Z77 or X79 mobo, [True] PCIe 3.0, and a Hyper-Threading processor [like Intel's quad-core or six-core i7]. ...

ClashoftheConso

Wth are you even talking about...

[QUOTE="Super-Trooper"][QUOTE="ProjectPat187"]Thanks so much for the responses, guys, except that one troll who came to post useless info.

ProjectPat187

All he did was ask a goddamn question and then state his opinion. Your responses, while not making you a troll, make you look like an ass, btw.

And all I did was ask a goddamn question, and not for his opinion or yours. Troll.

Projectpat187, you don't even know what a damn troll is, the way you throw your worthless words around... Everyone knows Silicel1 is NOT A TROLL and neither is SUPERTROOPER. So why don't you get your lame ass out of here and back to the noob forum where you belong?

Yes. For full performance, you'll need either a Z77 or X79 mobo, [True] PCIe 3.0, and a Hyper-Threading processor [like Intel's quad-core or six-core i7]. ...

ClashoftheConso

Seriously don't even think about it.

Your system is fine and you won't see a difference at all. Keep that monster of a card.

Keep it or wait for the 690 :)

acanofcoke

Take it from someone who knows a thing or two about dual GPUs... avoid them.

[QUOTE="acanofcoke"]Keep it or wait for the 690 :)

DevilMightCry

Take it from someone who knows a thing or two about dual GPUs... avoid them.

Maybe you've had a bad experience...

[QUOTE="Bebi_vegeta"]

[QUOTE="DevilMightCry"] Take it from someone who knows a thing or two about dual GPUs... avoid them.

GarGx1

Maybe you've had a bad experience...

Unless it's free ;)

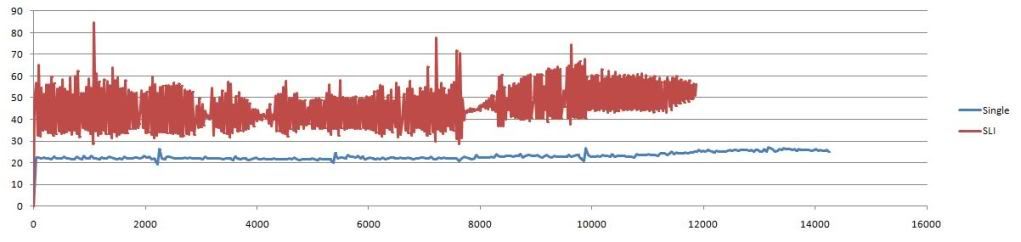

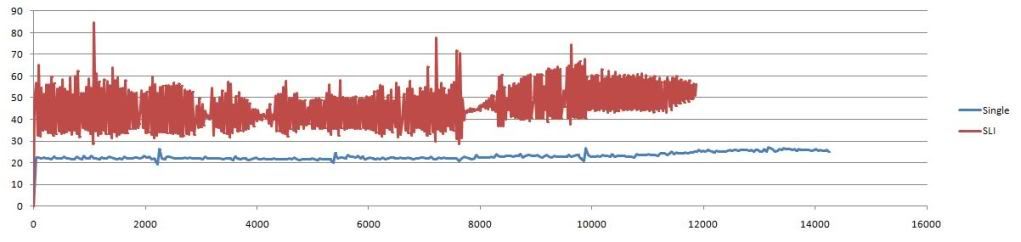

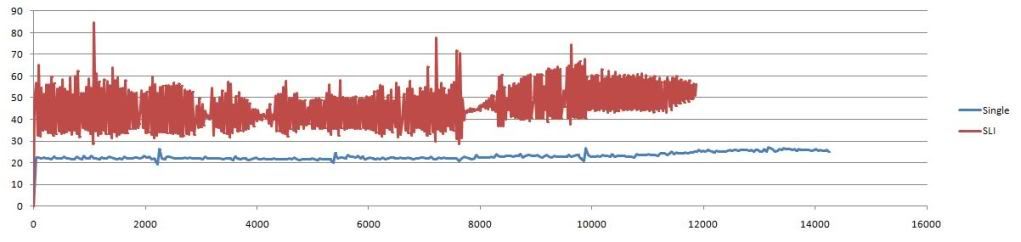

Personally, I've had a lot of problems with microstuttering on my old GTX 260s, but they were also victims of my stupid suicidal PSU, so maybe I'm an isolated case. Calculating the frame times in a few games with Fraps was scary.
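Microstutter is why average FPS alone can mislead on multi-GPU setups: two runs can share the same average while one alternates short and long frames. This sketch assumes the input is a list of cumulative per-frame timestamps in milliseconds, roughly what a Fraps frametimes log records (check your own log's column layout before relying on it). The "jitter" metric, the mean change between consecutive frame times, is an illustrative choice rather than a standard one, and both sample runs are synthetic.

```python
from statistics import mean


def frame_times(timestamps_ms):
    """Turn cumulative timestamps into per-frame durations."""
    return [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def stutter_report(timestamps_ms):
    """Summarize pacing; needs at least three timestamps."""
    ft = frame_times(timestamps_ms)
    avg_ms = mean(ft)
    # Mean absolute change between consecutive frames: 0 for perfectly even pacing.
    jitter_ms = mean(abs(b - a) for a, b in zip(ft, ft[1:]))
    return {
        "avg_fps": 1000.0 / avg_ms,
        "avg_frame_ms": avg_ms,
        "worst_frame_ms": max(ft),
        "jitter_ms": jitter_ms,
    }


def cumulative(durations_ms):
    """Build a synthetic timestamp log starting at 0 ms."""
    stamps = [0.0]
    for d in durations_ms:
        stamps.append(stamps[-1] + d)
    return stamps


# Synthetic runs: even pacing vs. the alternating short/long pattern of AFR microstutter.
smooth = cumulative([16.0] * 10)
uneven = cumulative([8.0, 24.0] * 5)

if __name__ == "__main__":
    for name, run in (("smooth", smooth), ("uneven", uneven)):
        print(name, stutter_report(run))
```

Both synthetic runs average the same frame rate, but the uneven one has a much worse worst frame and nonzero jitter, which is the kind of pattern that makes a frame-time log look scary even when the FPS counter looks fine.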

[QUOTE="ProjectPat187"][QUOTE="Silicel1"]Why do you buy a GPU if you don't know whether it will be bottlenecked or not?

Silicel1

That's not the question I asked. Obviously you don't know the answer. Why even post?

Sorry, but buying something that you know nothing about is stupid.

:lol: He's right, you know.

[QUOTE="GarGx1"]

[QUOTE="Bebi_vegeta"]

Maybe you've had a bad experience...

Idontremember

Unless it's free ;)

Personally, I've had a lot of problems with microstuttering on my old GTX 260s, but they were also victims of my stupid suicidal PSU, so maybe I'm an isolated case. Calculating the frame times in a few games with Fraps was scary.

[QUOTE="Idontremember"]

[QUOTE="GarGx1"]

Unless it's free ;)

mitu123

Personally, I've had a lot of problems with microstuttering on my old GTX 260s, but they were also victims of my stupid suicidal PSU, so maybe I'm an isolated case. Calculating the frame times in a few games with Fraps was scary.

I hope so!!!!

Take it from someone who knows a thing or two about dual GPUs... avoid them.

[QUOTE="DevilMightCry"][QUOTE="acanofcoke"]Keep it or wait for the 690 :)

Bebi_vegeta

Maybe you've had a bad experience...

He probably did. I had a pleasant experience with GTX 460 1GB SLI. But those cards were heaven-sent, at least for me. DevilMightCry has somewhat of a point, though. If you can avoid them, why not? Go with a strong single GPU that will be able to handle your gaming needs. Two GPUs in SLI/CrossFire are more likely to run into problems than a single GPU. On top of that, dual-, tri-, and quad-GPU setups produce a ton of heat (I'm looking at you, non-reference cards), unless you have a good PC case.

[QUOTE="Bebi_vegeta"][QUOTE="DevilMightCry"] Take it from someone who knows a thing or two about dual GPUs... avoid them.

Elann2008

Maybe you've had a bad experience...

He probably did. I had a pleasant experience with GTX 460 1GB SLI. But those cards were heaven-sent, at least for me. DevilMightCry has somewhat of a point, though. If you can avoid them, why not? Go with a strong single GPU that will be able to handle your gaming needs. Two GPUs in SLI/CrossFire are more likely to run into problems than a single GPU. On top of that, dual-, tri-, and quad-GPU setups produce a ton of heat (I'm looking at you, non-reference cards), unless you have a good PC case.

Good points... but usually getting a second card is the cheapest option for the performance gain.

He probably did. I had a pleasant experience with GTX 460 1GB SLI. But those cards were heaven-sent, at least for me. DevilMightCry has somewhat of a point, though. If you can avoid them, why not? Go with a strong single GPU that will be able to handle your gaming needs. Two GPUs in SLI/CrossFire are more likely to run into problems than a single GPU. On top of that, dual-, tri-, and quad-GPU setups produce a ton of heat (I'm looking at you, non-reference cards), unless you have a good PC case.

[QUOTE="Elann2008"][QUOTE="Bebi_vegeta"]

Maybe you've had a bad experience...

Bebi_vegeta

Good points... but usually getting a second card is the cheapest option for the performance gain.

Another good point. GTX 460 1GB SLI was probably the best bang-for-buck dual-GPU setup out there on the Nvidia side. They performed better than a single GTX 580 in most games and cost much less than a GTX 580 (which, as we remember, was $500 back then, not the $400 price point of today).

Ohh, I was talking about dual GPUs, like the GTX295 COOP to be more specific, not SLI.

DevilMightCry

Dual-GPU single cards are SLI. They're just SLI without using an extra slot. Whether you're using 2 separate cards or a single card with 2 GPUs, you'll run into the same issues.

[QUOTE="DevilMightCry"]Ohh, I was talking about dual GPUs, like the GTX295 COOP to be more specific, not SLI.

hartsickdiscipl

Dual-GPU single cards are SLI. They're just SLI without using an extra slot. Whether you're using 2 separate cards or a single card with 2 GPUs, you'll run into the same issues.

Although that's true, dual-GPU cards use less power than an SLI setup. A lot of people run into stuttering issues, etc., because of a poor PSU.
