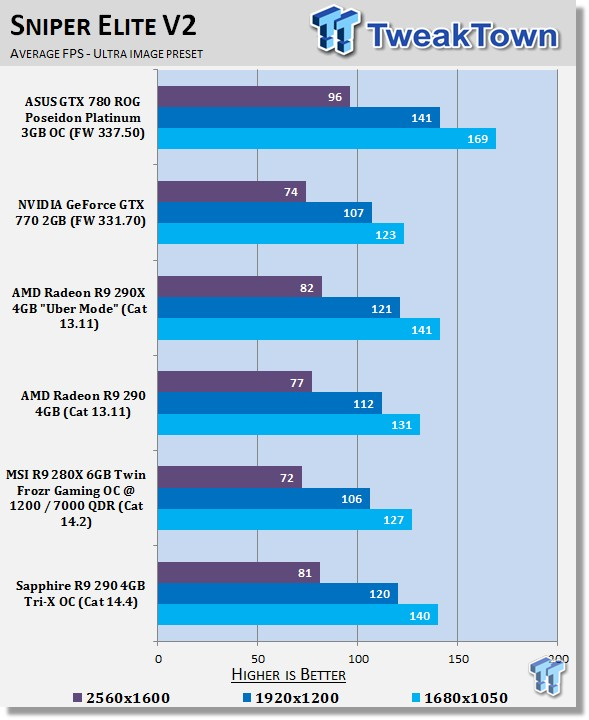

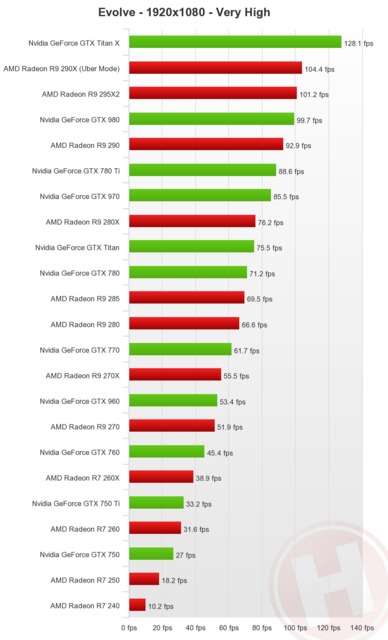

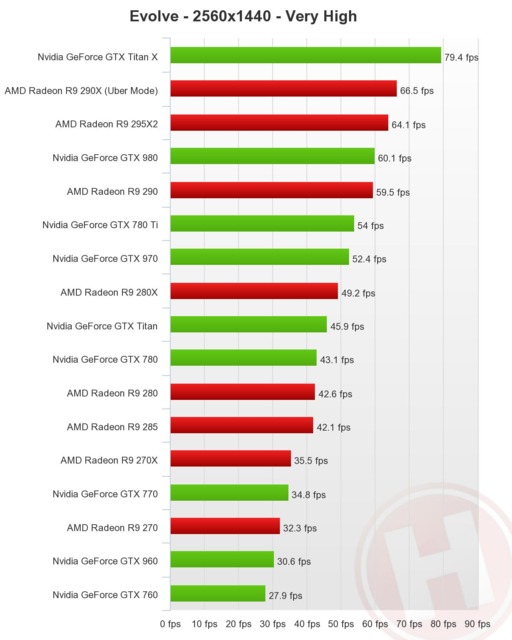

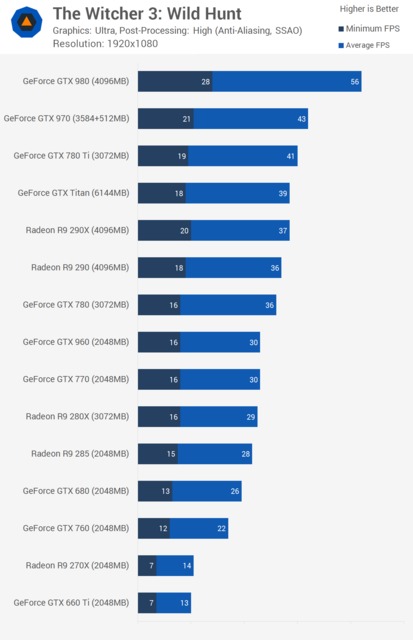

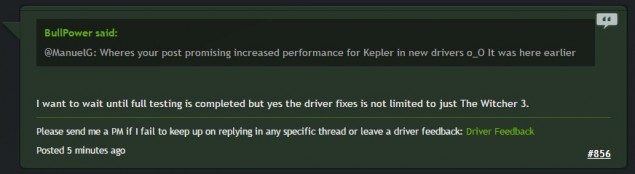

The top-tier GTX 780, which is supposed to outperform the R9 290, is being outbenched by the (at best) second-tier R9 280X. Although it was The Witcher 3 that started this shitstorm, the 700 series has been going downhill for more than half a year now. In fact, the 280X was already trading blows with the GTX 780 when Far Cry 4 was released.

Just look at these benchmarks:

http://www.techspot.com/review/1006-the-witcher-3-benchmarks/page4.html

http://www.techspot.com/review/917-far-cry-4-benchmarks/page4.html

http://www.techspot.com/review/991-gta-5-pc-benchmarks/page3.html

http://www.techspot.com/review/921-dragon-age-inquisition-benchmarks/page4.html

To put things into perspective, look at these older benchmarks and notice the gap between the 780/680 and the 280X:

http://www.techspot.com/review/733-batman-arkham-origins-benchmarks/page2.html

http://www.techspot.com/review/615-far-cry-3-performance/page5.html

http://www.techspot.com/review/642-crysis-3-performance/page4.html

In the Crysis 3 benchmark, the 680 (not even the 780) blows the 7970 out of the water! The 7970 is basically the same card as the 280X. Nowadays, the 7970 shits on both the 680 AND the 780. Either the AMD guys are wizards, or Nvidia does not give a shit.

At lower resolutions and settings, the GTX 780 is the clear winner, but the higher you go in resolution and graphics quality, the worse the GTX 780 performs. The GTX 780 is not even in the same league as the R9 290 anymore. Why should I keep my two GTX 780s when I can sell them, buy a couple of R9 290s for their better performance, and make $100 in the process?

Unless Nvidia explains what is happening and fixes the problem, I will never buy their products again. Either Nvidia is doing some shady shit behind the scenes to promote their Maxwell cards, or they simply build overpriced products that are clearly inferior to the competition and can't stand the test of time.

And to the fanboys who keep repeating the same crap about "teh maxwell tessellation technology": remember that you might find yourselves in a similar position a year from now, when Nvidia releases its next series of graphics cards.
