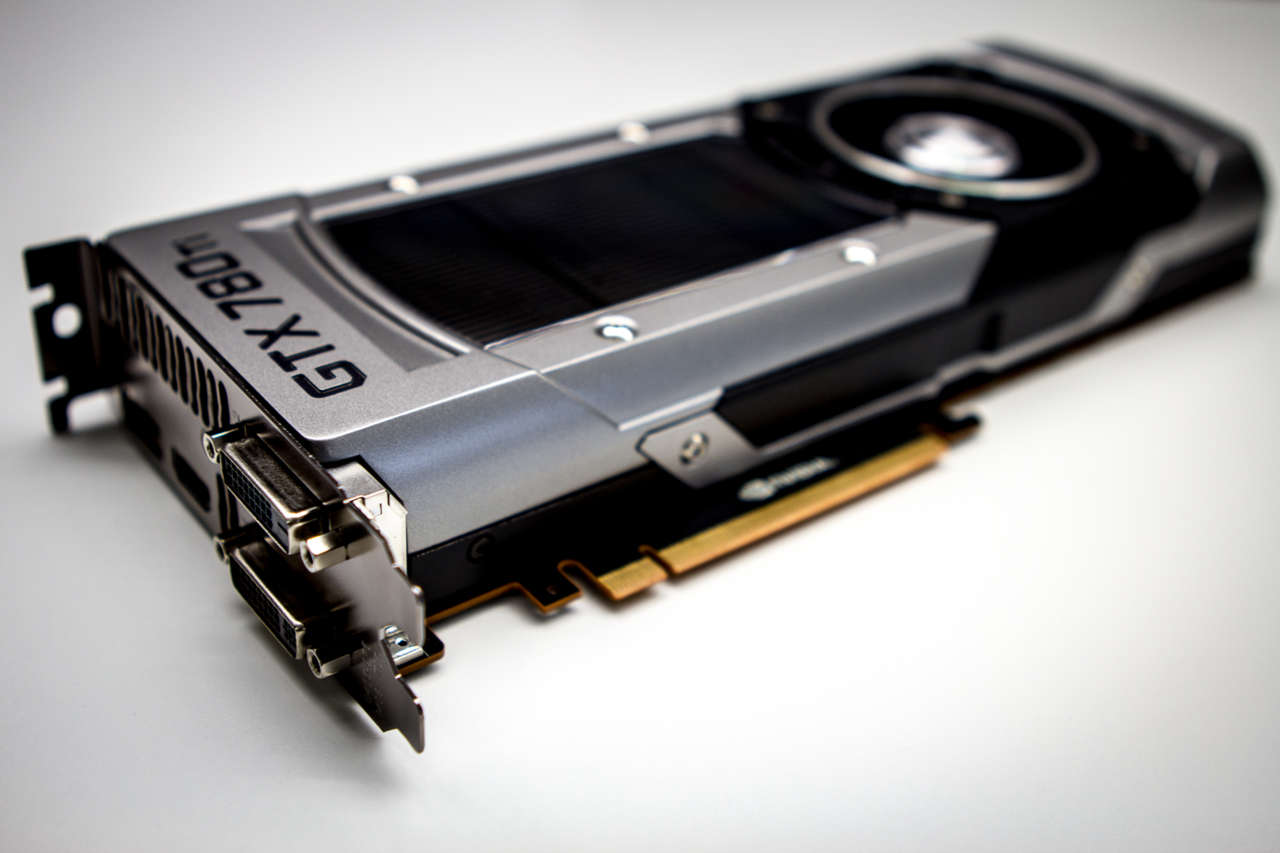

Nvidia GTX 780 Ti Review: A Powerful GPU With A Price To Match

The GTX 780 Ti just about pushes Nvidia back to the top of the GPU performance pile, but its price is far from competitive.

For the vast majority of PC players, 1080p is the benchmark for performance, and by far the most popular resolution in use for gaming (at least according to the latest Steam Hardware Survey). Games look great at 1080p, monitors are cheap and plentiful, and you don't need to spend a fortune on an insanely powerful GPU to drive them. But if you're running multiple monitors, high resolutions like 1600p or 4K, or if you're simply after some bragging rights, then the likes of a GTX 650 Ti or Radeon 7850 just aren't going to cut it.

Enter the GTX 780 Ti, the latest GPU from Nvidia based on the GK110 chip. That's the same full-fat Kepler chip used in the GTX Titan and GTX 780, both of which are already excellent performers at high resolutions. The trouble is, they aren't the best performers anymore. AMD's latest R9 290X and R9 290 have benchmarked extremely well, not only taking the performance crown from their rival, but also seriously undercutting it in terms of price. Nvidia's latest round of price cuts evens the playing field somewhat, but there's nothing quite like the prestige of having "the world's fastest graphics card".

The 780 Ti, then, has a big job ahead of it. At an RRP of $699 (£559 in the UK), it's still around $100 more expensive than the 290X, so it isn't going to be winning any awards for value. In terms of performance, though, it's very impressive. The 780 Ti is the first GPU to make use of the entire GK110 chip: the full 2880 single-precision CUDA cores, 240 texture units, and 48 ROP units. Memory comes in the form of 3GB of extremely fast 7Gbps GDDR5 for 336GB/s of bandwidth, while the base clock speed gets a bump to 845MHz, and the boost clock speed to 928MHz. It does lack scientific features like HyperQ and high-end 64-bit performance, but on paper at least, the GTX 780 Ti is the most powerful gaming card Nvidia has released.

| GTX 780 Ti Category | Specifications |
|---|---|
| GPU Specs | 2880 CUDA Cores |
| Memory Specs | 7.0 Gbps Memory Clock; 3072 MB Standard Memory Config; GDDR5 Memory Interface; 384-bit GDDR5 Memory Interface Width; 336 GB/s Memory Bandwidth |
| Software Support | OpenGL 4.3; PCI Express 3.0; GPU Boost 2.0, 3D Vision, CUDA, DirectX 11, PhysX, TXAA, Adaptive VSync, FXAA, 3D Vision Surround, SLI-ready |
| Display Support | Four displays for Multi Monitor; 4096x2160 Maximum Digital Resolution; 2048x1536 Maximum VGA Resolution; Two Dual Link DVI, One HDMI, One DisplayPort |
| Dimensions | 10.5 inches Length; 4.3 inches Height; Dual-slot Width |
| Power Specs | 250 W TDP; 600 W Recommended Power Supply; One 8-pin and one 6-pin Power Connector |
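The 336 GB/s bandwidth figure in the specs follows from the 7.0 Gbps memory clock and the 384-bit interface width. As a rough sketch of that arithmetic (the function name is ours, and this is the usual peak theoretical calculation rather than a measured figure):

```python
def memory_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak theoretical memory bandwidth in GB/s.

    Each pin of the memory interface moves `data_rate_gbps` gigabits per
    second, so the total is data rate x bus width, divided by 8 to turn
    bits into bytes.
    """
    return data_rate_gbps * bus_width_bits / 8


# Values taken from the GTX 780 Ti spec table: 7.0 Gbps, 384-bit.
gtx_780_ti_bandwidth = memory_bandwidth_gb_s(7.0, 384)
print(f"GTX 780 Ti: {gtx_780_ti_bandwidth:.0f} GB/s")  # prints "GTX 780 Ti: 336 GB/s"
```

Real-world throughput will be lower than this peak, but it is a handy way to sanity-check spec sheets when comparing cards.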

Software

Like all of Nvidia's GPUs, the 780 Ti comes bundled with GeForce Experience (GFE), an application that automatically optimizes the graphics settings of your games based upon your hardware. GFE automatically updates your drivers and scans your games library for supported games, aiming to target settings that achieve 40 to 60 frames per second. Since its release earlier in the year, GFE's performance has improved by leaps and bounds, with many more supported games and optimal settings chosen. Naturally, you'll be able to eke out more performance by diving in and editing things manually, but if you're happy to let GFE do the job for you, the results are impressive.

Also part of the 780 Ti software package is ShadowPlay, a gameplay capture system that leverages the H.264 encoder built into Kepler (600, 700 series) GPUs. It automatically records the last 20 minutes of gameplay at up to 1080p60 at 50Mbps in automatic mode, but you can record as much footage as your hard drive allows in manual mode. ShadowPlay's also due to support direct streaming to Twitch.tv, although that feature isn't in the current beta. The advantage of using ShadowPlay over something like Fraps is CPU and memory usage. In our testing we found it affected the frame rate far less than Fraps did, in many cases with a hit of just a few frames per second. The software is still in beta, though, so we experienced a few capturing hiccups and crashes, but hopefully those issues will be ironed out before its full release.
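To get a feel for how much disk space ShadowPlay's automatic mode might need, here's a back-of-the-envelope sketch. It assumes the full 50Mbps is sustained for all 20 minutes and ignores audio and container overhead, so treat the result as a rough upper bound rather than a measured file size. The function name is ours for illustration.

```python
def buffer_size_gb(minutes: float, bitrate_mbps: float) -> float:
    """Approximate size in decimal gigabytes of a constant-bitrate recording."""
    seconds = minutes * 60
    megabits = seconds * bitrate_mbps
    megabytes = megabits / 8  # 8 bits per byte
    return megabytes / 1000   # decimal GB (1 GB = 1000 MB)


# 20 minutes at 50Mbps, the automatic-mode ceiling quoted above.
print(f"{buffer_size_gb(20, 50):.1f} GB")  # prints "7.5 GB"
```

In other words, it's worth keeping several gigabytes free on whichever drive the rolling buffer is saved to.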

There's also a great games bundle attached to the 780 Ti, with copies of Assassin’s Creed IV: Black Flag, Batman: Arkham Origins and Splinter Cell: Blacklist coming with every card. That's a sweet deal considering they're such current games, and hey, if you've already got them there's always the joy of gifting or selling on eBay.

Performance

Our trusty Ivy Bridge PC backed the GTX 780 Ti, although this time we overclocked the CPU to 4.2GHz for a little extra oomph. A 1080p monitor would have been a waste for such a card, so we went with Asus' PQ321Q 4K monitor to really test its pixel-pushing power. With the exception of Crysis 3, all games were run at maximum settings and where possible we used FXAA for a performance boost. Call Of Duty: Ghosts was run at a lower resolution of 2560x1600, due to a current lack of 4K support.

| Component | Details |
|---|---|
| Motherboard | Asus P8Z68-V Motherboard |
| Processor | Intel Core i5 3570K @ 4.2GHz |
| RAM | 16GB 1600MHz DDR3 Corsair Vengeance RAM |
| Hard Drive | Corsair Force GT/Samsung Spinpoint F3 1 TB |
| Power Supply | Corsair HX850 PSU |
| Display | Asus PQ321Q @ 3840x2160/Dell 3007WFP-HC @ 2560x1600 |

Battlefield 4 (2x MSAA @ 3840x2160)

| GPU | Average FPS | Minimum FPS | Maximum FPS |
|---|---|---|---|
| GTX 780 Ti | 32 | 24 | 44 |
| GTX Titan | 29 | 21 | 40 |
| GTX 780 | 26 | 13 | 36 |

Crysis 3 (High Settings, FXAA @ 3840x2160)

| GPU | Average FPS | Minimum FPS | Maximum FPS |
|---|---|---|---|
| GTX 780 Ti | 30 | 24 | 44 |
| GTX Titan | 27 | 22 | 34 |
| GTX 780 | 25 | 20 | 37 |

Call Of Duty: Ghosts (HBAO+, FXAA @ 2560x1600)

| GPU | Average FPS | Minimum FPS | Maximum FPS |
|---|---|---|---|
| GTX 780 Ti | 75 | 28 | 107 |
| GTX Titan | 76 | 47 | 104 |
| GTX 780 | 54 | 37 | 83 |

Bioshock Infinite (Ultra @ 3840x2160)

| GPU | Average FPS | Minimum FPS | Maximum FPS |
|---|---|---|---|
| GTX 780 Ti | 50 | 34 | 67 |
| GTX Titan | 40 | 33 | 61 |
| GTX 780 | 30 | 25 | 61 |

Tomb Raider (Ultra @ 3840x2160)

| GPU | Average FPS | Minimum FPS | Maximum FPS |
|---|---|---|---|
| GTX 780 Ti | 30 | 23 | 43 |
| GTX Titan | 29 | 21 | 39 |
| GTX 780 | 28 | 16 | 37 |

Metro: Last Light (Ultra @ 3840x2160)

| GPU | Average FPS | Minimum FPS | Maximum FPS |
|---|---|---|---|
| GTX 780 Ti | 33 | 27 | 49 |
| GTX Titan | 29 | 25 | 37 |
| GTX 780 | 25 | 20 | 40 |
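For anyone who wants to turn the average frame rates above into relative gains, here's a short sketch. The numbers are copied straight from our benchmark tables; the dictionary layout and function name are just one way to organise them, and minimum and maximum frame rates are left out for brevity.

```python
# Average FPS from the benchmark tables above.
AVERAGE_FPS = {
    "Battlefield 4": {"GTX 780 Ti": 32, "GTX Titan": 29, "GTX 780": 26},
    "Crysis 3": {"GTX 780 Ti": 30, "GTX Titan": 27, "GTX 780": 25},
    "Call Of Duty: Ghosts": {"GTX 780 Ti": 75, "GTX Titan": 76, "GTX 780": 54},
    "Bioshock Infinite": {"GTX 780 Ti": 50, "GTX Titan": 40, "GTX 780": 30},
    "Tomb Raider": {"GTX 780 Ti": 30, "GTX Titan": 29, "GTX 780": 28},
    "Metro: Last Light": {"GTX 780 Ti": 33, "GTX Titan": 29, "GTX 780": 25},
}


def uplift_percent(new_fps: float, old_fps: float) -> float:
    """Percentage change in frame rate going from old_fps to new_fps."""
    return (new_fps / old_fps - 1) * 100


# Print the 780 Ti's lead (or deficit) against each of the other cards.
for game, fps in AVERAGE_FPS.items():
    vs_titan = uplift_percent(fps["GTX 780 Ti"], fps["GTX Titan"])
    vs_780 = uplift_percent(fps["GTX 780 Ti"], fps["GTX 780"])
    print(f"{game}: {vs_titan:+.1f}% vs GTX Titan, {vs_780:+.1f}% vs GTX 780")
```

By this measure the 780 Ti's average lead over the Titan runs from roughly level in Call Of Duty: Ghosts to 25 percent in Bioshock Infinite, while its lead over the GTX 780 is wider across the board.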

A Pricey Performer

As expected with such killer specs, the GTX 780 Ti screams through the likes of Battlefield 4 and Call Of Duty: Ghosts, even at 4K, easily beating the GTX 780 and even the $1000 Titan. It's an impressive showing for a card based on an architecture that's now well over a year and a half old, and represents the peak of Kepler's rendering abilities. While we unfortunately didn't have an AMD R9 290X on hand to make a direct comparison, judging by the benchmarks out there, the 780 Ti is a comparable card and once again places Nvidia within striking distance of, if not back at, the top of GPU performance.

Such performance comes at a price, though. At over $100 more than the R9 290X and nearly $300 more than the similarly performing R9 290, the 780 Ti is an expensive choice. It's also $100 more expensive than the GTX 780, a GPU that's hardly a slouch when it comes to high-resolution performance. Yes, the 780 Ti is far more power-efficient than AMD's latest, and yes, it's a very quiet card in operation too. We also experienced none of the power throttling issues that are currently plaguing the R9 290.

Whether that's worth the extra cash, though, is debatable. No doubt about it, the GTX 780 Ti is a brilliant GPU backed by some brilliant software, but you can do a lot with that $100 saving (or even $300 if you plump for the R9 290). AMD's aggressive pricing has taken the shine off the GTX 780 Ti, but if you're all in for team green and have the high-res setup to do it justice, it's the absolute best you can get from Nvidia, and one of the best GPUs (a lot) of money can buy.
